Introduction

This book is for curious software developers of all types. It doesn’t matter which language you use and the industry you work in is irrelevant; what does matter is your interest in why things are the way they are. You will find much more than object-oriented design patterns here as the problem we face is far broader.

This book is for developers who have heard of, used, or even read up on design patterns but think something is missing or needs correcting. It is also for those who wish to know why this can still be the case. Furthermore, it is for those individuals who love design patterns and want to know how to extend their benefits. This book is for developers with little to no experience in design patterns who wish to avoid their pitfalls, and it can benefit anyone who has worked with patterns for a while but was surprised when their purported values did not emerge. It is for those who like the design on the cover and think it would look nice on their bookshelf.

In these pages, I hope you will find practical tips and useful takeaways, but this is not a how-to manual. Instead, think of this as a book that explains the principles you can use in the following ways:

- To build a toolkit of techniques to dissect existing patterns

- To learn how you can better repair broken things when you see them

- To uncover ways to correctly identify whether they are broken in the first place

In short, if you have opinions on design patterns, this book can help you justify them. If you don’t, you might just begin to develop some. Additionally, as a bonus, you will discover how to get the most out of them, regardless of their flaws.

As the story unfolds, the foremost players will be an architect, a small group of software developers, a movement populated by hundreds of developers, and the way in which the world reinforces our actions.

The architect

Christopher Alexander was the architect. He was the central character for the initial discovery of patterns and set the course of pattern history. Although he wasn’t a software developer by any means, he was fascinated by the possibilities presented by developing software using a pattern-language approach. His book

The software developers

A small group of software developers became known as the Gang of Four, often abbreviated to the GoF. Together, in 1994, Erich Gamma, Richard Helm, Ralph Johnson, and John Vlissides produced an incredibly successful book called

The movement

The software-design-patterns movement was real and still exists to this day, but it is now far more low-key. At its height, hundreds of developers contributed to it and spread the word to anyone who would listen. In addition, the movement rippled out into other areas of development, such as education and organisational change. As the hype receded, only a few remained faithful to the broader pattern movement.

The world

The last component of the story is the world itself and how natural laws of emergent behaviour clashed with many of the expectations and needs of those involved. This final element—the emergent behaviour of complex systems—brings us back to the start. Christopher Alexander’s first work sought to tame complexity in intensely interconnected projects and guide the emergent properties of larger systems to positive conclusions.

Our journey

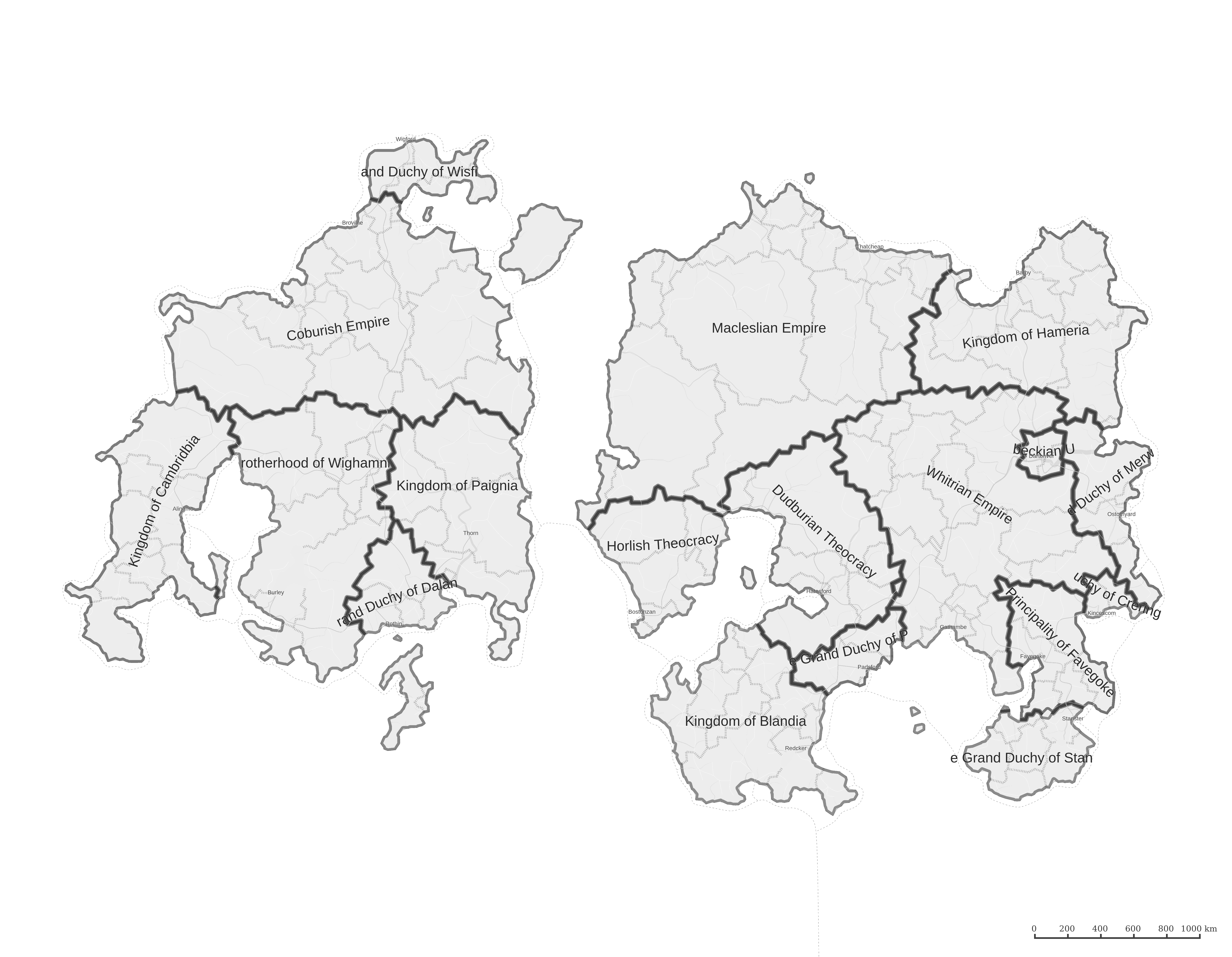

During this voyage, I must reference patterns from software development and physical architecture. The majority of the physical architecture patterns identified by Christopher Alexander and his colleagues are from the book

Before we begin the journey, we should take a look at the road map. In the rest of this introduction, I intend to highlight the kinds of questions that I later attempt to answer, so that you know where we’re going. The terrain will get a little rough at times, and there are no well-worn tracks in many places; unfortunately, this means that it will be necessary to explain some of the historical and theoretical elements of the topic along the way. In the remainder of this introduction, I won’t try to convince you of anything or attempt to answer any questions directly; I will only clarify what I intend to address by the end of the book.

Which patterns?

We should start with a simple question for software engineers who already know about design patterns: how many design patterns are there? Most would give an answer of 22 or 23, depending on whether they’re brave enough to include the

- What are the 23 design patterns?

- What are the 3 basic categories for design patterns?

However, most software engineers (and leading search engines) get this figure wrong, as there are many more. If you’re in the camp that thought there was a higher number, you might be thinking about the

Nevertheless, these books only cover a few hundred examples. Unless your answer was in the thousands, you were still way off. In fact, you would have been conservative in your estimate in the year 2000; if you only include the list of published patterns, the number already exceeded a thousand³ as is evidenced in those collected in

This raises another issue. The almanac only accounted for the patterns discovered and published in and around software development. Many people forget that other areas are equally important to software developers:

- Learning and teaching patterns.

- Patterns of people management and organisations.

- Patterns of bringing about change.

The belief that patterns are limited to software engineering, restricted solely to the domain of object-oriented design, and constrained to patterns of implementation, is an incredibly narrow viewpoint. It would be like claiming you know how to cook when your culinary expertise only stretches to five different ways to make eggs on toast.

So why do we collectively believe there are only 22 or 23 design patterns for software when there are actually so many others out there? Moreover, if so many patterns exist and more are being found all the time, where are they? I will answer these questions and also explain how this state of affairs was somewhat inevitable.

There was a place—the original wiki—which kept track of patterns and the conversations around them. If you have heard of this site, which is known as the Portland Pattern Repository, you may also know that it hasn’t been updated in some time. Why is this the case? There is also the Hillside Group, which maintains a website with many (often dead) links, but they’re not as famous as the GoF book[GoF94]. Why does an exhaustive pattern catalogue for everyone to use not exist?

While a definitive answer explaining why this didn’t happen in the past would be impossible to provide, I will reveal the many forces at play that continue to make it an unlikely event. You will learn how those forces affect not only established software development practices but also the processes and methodologies adopted only recently. In addition, you will learn how physical architecture and building development are similarly affected.

The

I counted 1007, but there are quite a few, so I could be wrong.

Language

If you look at any modern and reasonably sized piece of software, you will surely come across something with the word factory in the name. You might also find a few singletons in the code. The larger the codebase, the more likely it is that you will see recognisable object-oriented design patterns. You may even note a query_decorator, a list_iterator, a status_observer, and possibly even a page_builder or two. These pattern names have become thoroughly entrenched in our software development language.

Though not as widely studied these days, design patterns are regularly used by people who have yet to see a copy of the GoF book[GoF94]. The names of these patterns are baked into tutorials, how-to guides, and example code, all of which people copy and paste into their projects.

Design patterns first appeared in

However, there is a downside to the language-like qualities of patterns: they become less useful in the long run. Structure and communicability come at a price, as a pattern loses its essence when it is referred to only by an arbitrary handle.

As an example of the weakness of words, what does the word ‘literally’ mean to people now? In the past, it used to mean ‘real’ and ‘actual’, not the imprecise term it has now become. Additionally, what does ‘done’ mean to the people you work with on different projects? Language evolves. Sarcasm aside, words and their meanings are relative to the environment and the participants in that environment. We need to recognise that definitions are not eternal and immobile—but rather cultural and flexible.

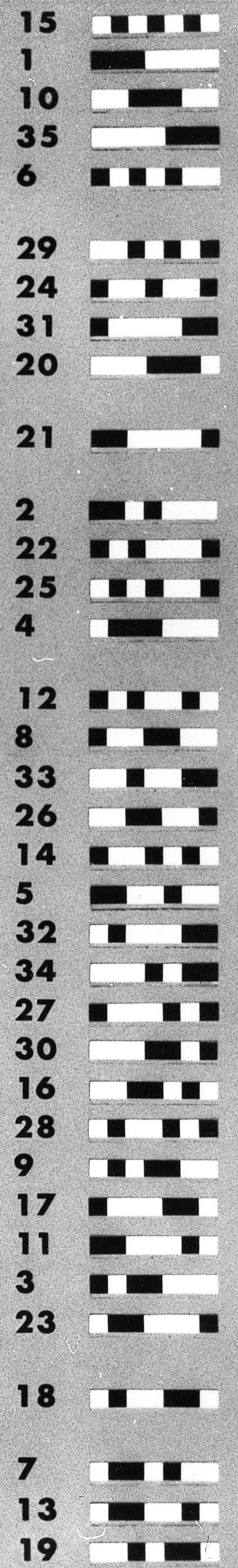

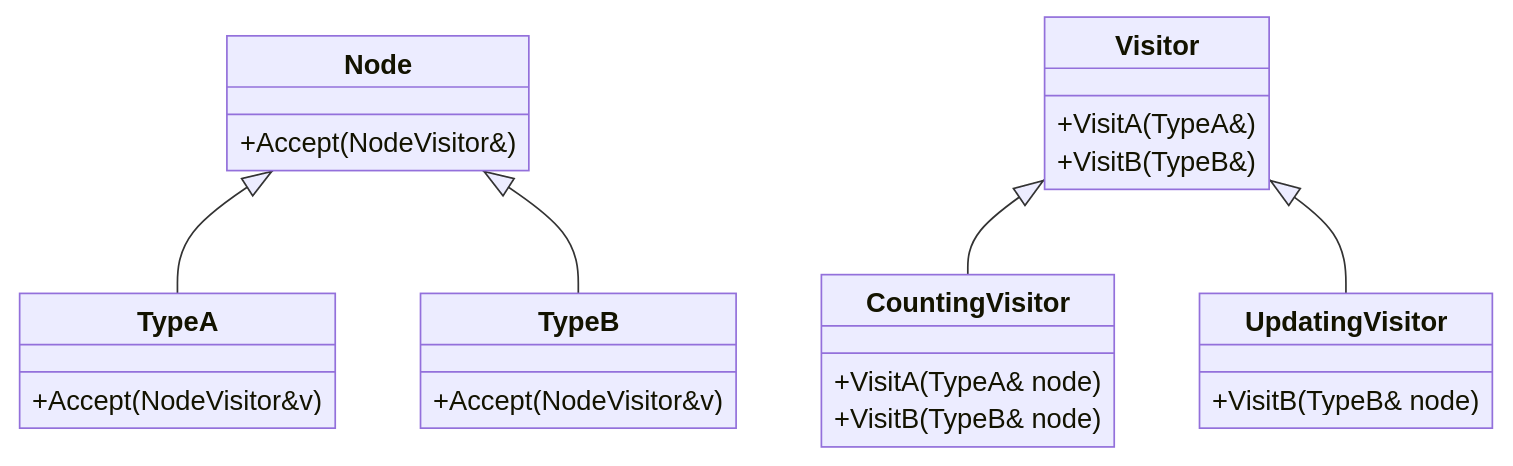

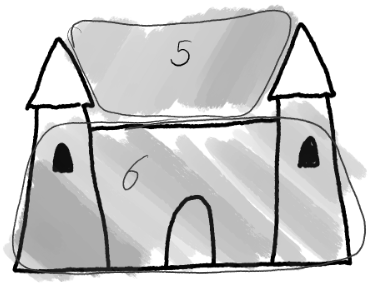

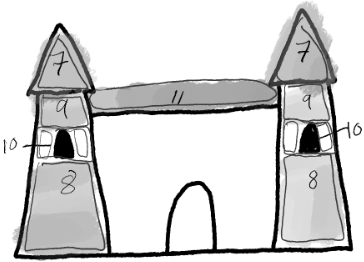

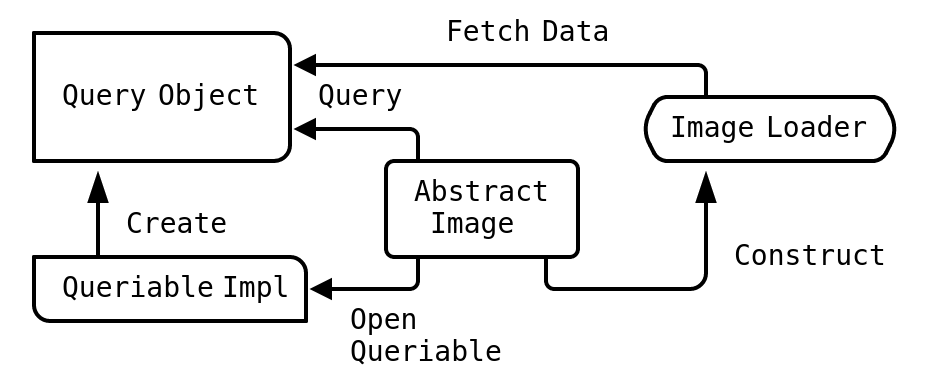

The GoF Visitor.


The books published in the 1990s were full of recognised patterns, and pattern authors gave names to each of them. The names stuck around, but our understanding of what those patterns mean has drifted. When we copy patterns without completely understanding them, their meaning mutates. If we copy a visitor pattern from an implementation that walks a structure of composite objects, but we fail to see the details of the typed callbacks, the pattern word will now mean something else.
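The drift described above can be made concrete with a minimal sketch. Everything named here (File, Folder, SizeTotal, walk) is invented for illustration and is not taken from the GoF book; the sketch also assumes the structure drives the traversal, which is only one of the arrangements a visitor can use. The first half shows a visitor with one typed callback per node class. The second half shows what tends to survive a careless copy: a walker with a single untyped callback.

```python
from abc import ABC, abstractmethod


class Visitor(ABC):
    """Visitor with one typed callback per concrete node class."""

    @abstractmethod
    def visit_file(self, node: "File") -> None: ...

    @abstractmethod
    def visit_folder(self, node: "Folder") -> None: ...


class Node(ABC):
    @abstractmethod
    def accept(self, visitor: Visitor) -> None: ...


class File(Node):
    def __init__(self, name: str, size: int) -> None:
        self.name = name
        self.size = size

    def accept(self, visitor: Visitor) -> None:
        # The node selects the callback that matches its own type.
        visitor.visit_file(self)


class Folder(Node):
    def __init__(self, name: str, children: list) -> None:
        self.name = name
        self.children = children

    def accept(self, visitor: Visitor) -> None:
        visitor.visit_folder(self)
        for child in self.children:
            child.accept(visitor)


class SizeTotal(Visitor):
    """Sums file sizes without ever inspecting a node's type."""

    def __init__(self) -> None:
        self.total = 0

    def visit_file(self, node: File) -> None:
        self.total += node.size

    def visit_folder(self, node: Folder) -> None:
        pass  # folders contribute no size of their own


def walk(node: Node, callback) -> None:
    """Plain walker: a single untyped callback receives every node."""
    callback(node)
    if isinstance(node, Folder):
        for child in node.children:
            walk(child, callback)


tree = Folder("root", [File("a.txt", 3), Folder("sub", [File("b.txt", 4)])])

# Typed callbacks: dispatch happens inside accept().
visitor = SizeTotal()
tree.accept(visitor)

# Walker: the callback has to rediscover each node's type for itself.
sizes = []
walk(tree, lambda node: sizes.append(node.size) if isinstance(node, File) else None)
```

Both halves visit the same nodes and arrive at the same total, which is why the two are easy to confuse. The difference is where the type knowledge lives: in the first it is carried by the pairing of accept and the typed callbacks, while in the second it leaks into every callback as a type check.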

We can be forgiven this as even the GoF referred to the

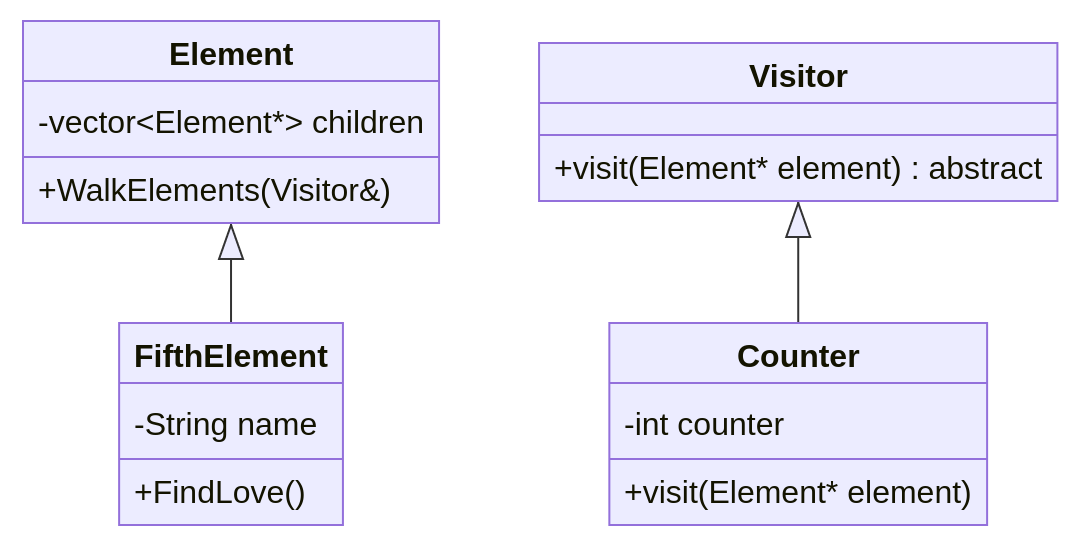

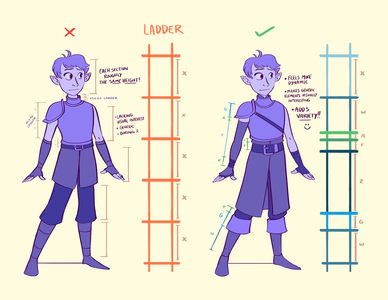

A Walking Pattern.


Beyond the individual names of patterns, nowadays we regularly use the term ‘design pattern’ interchangeably with ‘technique’ or ‘UX design system’. Some authors will add ‘design patterns’ to the title of a book or webpage to lend a sense of validity to the content delivered. It is intensely dissatisfying to observe how anything that indicates a property will inevitably be mimicked and ruined, from black-and-yellow striped but non-threatening insects to five-star review bots. However, why is this dilution of meaning not seen as a problem by those involved in the patterns movement? Worse still, when it is recognised as a problem, why is it seen as somehow unavoidable?

Aside from co-opting the term, UX design patterns exhibit another problem. Even though some people complain that the GoF patterns are idiomatic, UX patterns suffer from the same flaw to a much greater extent. I explain in more detail later what idiomatic means for patterns, but compared to authentic pattern forms, idioms are less powerful, less adaptable, and often unfalsifiable.

Indeed, UX patterns don’t fit into the original definition by Christopher Alexander, but does this matter? Are idioms just as useful in practice? Given how the usage of the term has changed, what is the value in trying to fix anything about design patterns if they don’t exist anymore, except in the form of a book and some recurring names?

I will answer these questions and explore how to regain some of the benefits of patterns without requiring the whole system to be overhauled.

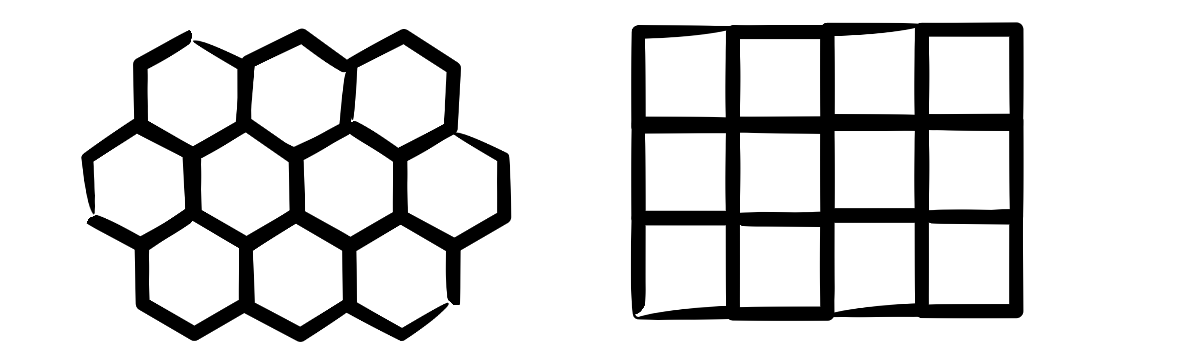

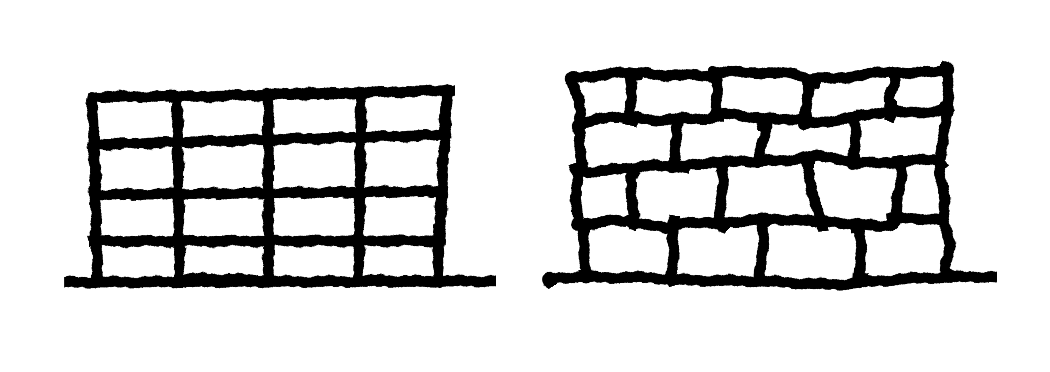

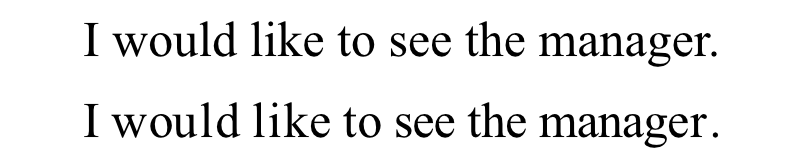

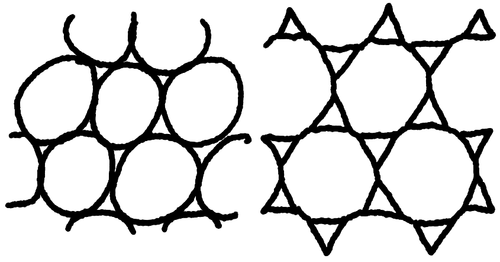

Naming is hard. ‘Our names for the patterns have changed a little along the way. “Wrapper” became “Decorator,” “Glue” became “Facade,” “Solitaire” became “Singleton,” and “Walker” became “Visitor.”’[GoF94] p. 353. We shall see later how Christopher Alexander approached this problem through the use of diagrams.

The GoF book

There is also the question of the content and form of the GoF book[GoF94]. First published in 1994, it’s stood the test of time for a work on computer programming. No book other than

There is also the question of why other books on patterns are not in the top ten of best-seller lists for software design and architecture. I had to get down into the 20s before I found another title at the time of writing. In the lists I saw, which offered rankings² in accordance with estimated sales figures,

Some people have asked whether the GoF patterns are design patterns at all. In fact, more experienced people than me have called them out as idioms, while some individuals have stated the GoF’s patterns are not found in actual use anywhere³. Others claim their use only began because of the paper and the book, turning them into a kind of self-fulfilling prophecy. Why do people think the book’s patterns aren’t actually patterns? If this is true, what are they then? And what would an actual pattern look like?

Finally, there’s the question of form. There are some concerns regarding the way the patterns are structured in the book; some of them did not make complete or obvious sense when contorted into this mould, making them less practical than they could have been. The work also observes that there are significant differences⁴ from Christopher Alexander’s patterns and does not strictly adhere to the principles of his pattern language. This raises the following questions: why do some people think the movement could have been hurt by setting a theme and structure for patterns? What harm did this literary structure do to the power of patterns as both a device and a movement?

Even the original GoF believe some patterns need updating and others should be added, so realistically, the answer is a simple no.

One site was https://bookauthority.org/books/best-selling-programming-books; the other was digging into Amazon sales rankings myself. The rankings change regularly. Sometimes, there are no other design patterns books in the top 20. Other times, it seems

This particular point was written up by David Budgen in a study at Durham University: https://www.infoq.com/articles/design-patterns-magic-or-myth/

Page 356 mentions Alexander’s patterns have an order, emphasise the problems rather than solutions, and have a generative capacity. On the following page, they admit the work is not a pattern language but ‘just a collection of related patterns’.

Systems theory

Central to the history of design patterns was a drive to tame the complexity inherent in architecture, both in terms of physical construction and software. Both forms of development deal with sequences of constructive actions with continuously interacting elements. Interactions between elements, which in turn create emergent behaviour, are what systems theory is all about. So, given how linked together they are, why do we fail to teach systems theory alongside design patterns?

We can use systems theory to analyse questions regarding the larger systems at play in the design pattern space, not only in terms of the patterns themselves but also the development of the theory of patterns. Moreover, we can examine the systemic problems caused by the current processes for finding and publishing patterns and for their eventual use. There were certain forces at play that drove the adoption of patterns, yet others have diverted them toward a different form. The pattern movement changed over time, and systems theory helps to explain and predict some of the otherwise unexpected outcomes.

In later works by Christopher Alexander¹, it becomes apparent that systems of feedback between larger social entities (such as the pattern movement, the software development industry, and the construction industry) led to some far-reaching consequences. These outcomes range from the mundane, including mass selling-out, to the unnerving reality of corruption, bribery, and death threats. Systems theory will help us to frame these events so that we can see them coming before they arrive and avoid similar problems in the future.

Many of Alexander’s later works touch on systems theory without being explicit.

Unfulfilled potential

Design patterns seemed like a fantastic thing when they first became popular. Their promise of improved code reusability was—and still is—vital to many people. However, we need to know why that potential was not realised. Given how many patterns there are, with all their combined, compiled wisdom, why were they so ineffective as a form of knowledge transfer? This point is not debatable; as they stand, they are ineffective. The lacklustre level of adoption of any but the GoF patterns in mainstream development practices is a clearer testament to the inefficacy of the movement than any statement I could make.

Large-scale, complex software requires considerable effort to get right. The work of Christopher Alexander was all about managing the complexity of a different domain. What happened to that aspect of design patterns during the concept’s infiltration into software design patterns? Why haven’t they helped to tame the complexity? Was the initial response of the software industry neglectful of his work? What can we do to put ourselves back on track? Do we need to go back further and start again? In other words, should we walk the same path as he did to derive our own truer, more fitting form of patterns for software development?

Tracing the history of how the design pattern movement affected buildings and architecture reveals another parallel with software development. When and where the patterns processes were allowed to run their course, you can see how they created significantly better final results than the alternative—the default approach of contracts and paper design. However, the determination of what is better is a value judgement from a particular perspective. According to systems theory, the world stood in the way of what was locally good because the system above viewed Christopher Alexander’s work through a different lens—a lens of power.

That skewed interpretation remains as much alive in activities related to software design patterns as it does with regard to the physical building space. Inevitably, this leads to similar obstructions and corruptions of the process. Is something actively stopping design patterns from achieving their potential? If so, can we do anything about it?

Where next?

I hope I have successfully whetted your appetite with these questions or, at the very least, helped you decide whether this book is for you. Either way, I have outlined the premise to be explored in these pages. My research is incomplete, and I wonder if it will ever end, as this topic is significantly broader than I initially believed. I continue to dig further as I write this first edition and will likely produce a follow-up edition a decade or so from now. If not, I hope this book will explain why I, like the GoF, never got around to it.

What I have learnt has changed my mind multiple times. I used to be a staunch design pattern sceptic. I believed patterns to be useless cargo-cult content. However, I now find them fascinating and useful but ultimately unfinished. There is much work to be done to get them to where they could be, so I lay out the measures that need to be taken. This is not a call to arms but rather a projection of what the future may hold if I, or others, engage in the necessary work. There is promise there, but no promises, I’m afraid.

Now, we must start at the beginning. A full description of the origin of the form is absent from so much of the literature on design patterns. However, as is so often the case, history holds the key to understanding. It will help unlock their potential and reveal the reasons why things went wrong for design patterns in the way they did.

The Link to Agile

To fully understand design patterns, it’s useful to trace how they evolved through the different domains of their existence and how each domain affected and was affected by design patterns. However, you’re not here for a history lesson. You’re a software developer who wants to know the relevant details with some fundamentals to back up the claims. This is why we will take a shortcut to the point where the most striking similarities between the two design patterns movements—in physical architecture and software development—seem to have materialised.

Agile, as it is understood these days, is a non-process where software is developed and deployed according to the

At the same time as the design patterns movement in software, other changes were brewing. The concepts at the core of Scrum can be traced back through published works on the Portland Pattern Repository or found in

It’s not that the Agile principles came from design patterns, nor did design patterns come from Agile, but they both appear to stem from the mood of the times. Both emerged from similar feelings that there had to be a better way to develop software. A backlash formed from the notion that we could increase quality by moving decision-making closer to the place where the effect of those actions could be observed.

Christopher Alexander had shown the value of looking to the recipient and user of the product as a guiding force for decision-making; in some cases, even a worker on the building site would be deeply involved. Agile development is an attempt to bring the customer closer, even going as far as to suggest including them in live tests during development. Alexander’s work on the processes of using patterns to help guide production mirrored many aspects of the worker empowerment found in Episodes and Scrum.

The

The manifesto can be found at https://agilemanifesto.org/ and the principles can be found adjacent at https://agilemanifesto.org/principles.html. There are a lot of resources available describing Agile, with many mistaking Scrum or SAFe or some other methodology for Agile itself.

Kent Beck developed the approach during his time on the Chrysler Comprehensive Compensation System project. Some accounts put the timeline around 1996, but the book

Ken Schwaber and Jeff Sutherland introduced the main thrust of the methodology at an OOPSLA conference in 1995, but had been using it and refining it for many years before.

For example, the usage of the term scrum and some of its constituent activities dates back to a 1986 article titled The New New Product Development Game by the authors of the

Complexity

For anyone reading the GoF book[GoF94], the abridged history and reference to the originator of the term ‘design pattern’ might be misleading. The information on Christopher Alexander can be found early on in the book but is quite limited in scope. When I first read this work, I imagined that he was some revered figure from an era before my grandparents were born, but the real story is much stranger and more intriguing.

Christopher Alexander was not some historical figure who lived during the height of the old British Empire. Instead, he was a modern architect working at the time of the rise of the software-design-patterns movement. However, he would almost certainly have rejected the claim that he was a modern architect, as there are many preconceptions associated with the term.

Some individuals, including myself, suggest he was a post-modernist because he understood modern architecture more deeply than most modern architects ever did. This deep understanding led to his disillusionment with the architectural institution as a whole[Grabow83], and simultaneously led to him being praised and revered for a generation as a ground-breaking architect with a vision for a new era of architectural methodologies.

When most people think of modern architecture, they think of glass and steel buildings rising in the sky. They picture cubes and off-angle constructions. They conjure images of brutalist buildings built with cutting-edge mechanical engineering techniques. All these things are modern architecture, but they are not modern methodologies. This realisation by Christopher Alexander precipitated his journey into patterns and beyond. He saw the modern way as stagnant and realised it was stymied by complexity.

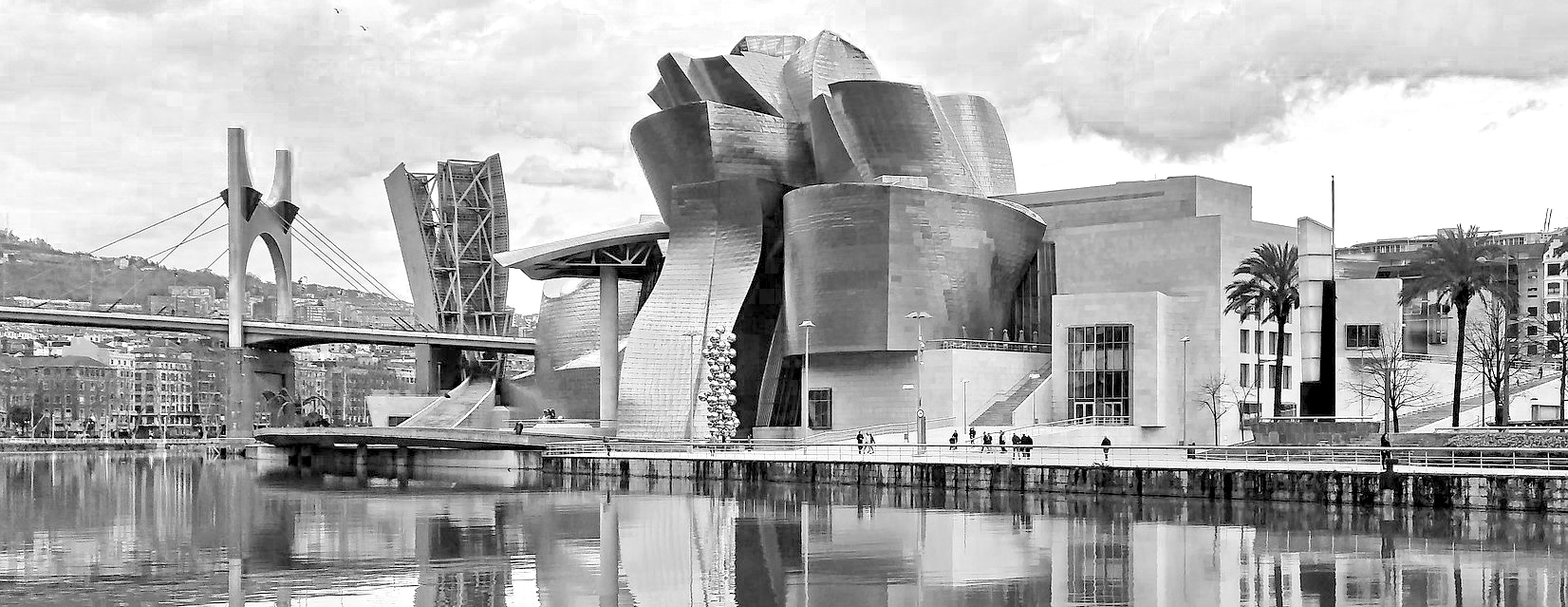

Guggenheim Museum Bilbao: modern construction, conventional planning.


The mainstream understanding of building architecture was, and still is, that an architect must first produce a design, and only then can it be built. The more elaborate and complicated the design, the better the architect must be in order for the project to succeed. If a large design were commissioned, the project would need an architect who could handle the necessary thinking to produce it. They would need to spend much time in deep thought, imagining a new building from nothing and then drawing out the design. A first draft is always thought to be flawed, so the process was geared towards assuming as much and allowing further iterations. Others would review it and help the architect revise the plan where necessary, but it was always a singular vision.

As any project grows, the number of interconnected pieces multiplies. A single human mind cannot consider all of the effects of changing one or two small parts. Because of this, modern architecture often relies on modular parts or last-minute additions to fix impossible stresses. Modern modular construction moves the decision-making and problem-solving processes away from the construction site and back to a paper plan, trivialising the problem into an abstract form. Instead of solving a wiring or glazing issue for a specific house, modern methods use modular pieces to resolve the more general puzzle of glazing or wiring for the average house. There is a problem with this though: I don’t know anyone who is precisely average.

Such forced inattention to local details and deviations inspired Christopher Alexander to write his original work,

From the start, Alexander’s work revolved around resolving the problem of dealing with complexity in our world. Since we had passed the point where traditional architectural methods, which happily adapted to changing needs at a local scale, could no longer keep up with our new technologies, we could not go back. Furthermore, because modern architectural methods, which could support ever-changing technologies, could not address critical problems of complex environmental constraints, we needed something that surpassed them as well.

Christopher Alexander refined his methods over the course of decades. During the period of the software design-patterns movement, his work on architectural patterns had already stabilised. However, he was then in a phase where he was working to refine even more fundamental properties of forms and processes of change. We rarely see references to this later phase within software development literature, but its absence may provide some insights into why the patterns movement ended up the way it has.

The way Christopher Alexander worked

Christopher Alexander’s processes differed from those of the prevailing builders and architects at the time[TOE75]. Indeed, many aspects of his work were similar to the principles espoused in the

However, his team also used other processes that were less in keeping with what we consider agile software development to be. They would use numerical methods¹ to solve things, performing experiments through simulation. They would use new materials in novel ways², offering some of the benefits of traditional materials but without their limitations. Additionally, they worked to a limited budget and kept track of what was possible³, rather than revising costs and requesting additional funding. In a critical departure from agile software development principles, they were unable to provide a constantly deliverable product as they did not have multiple working versions from which a preferred solution could be selected. Half done for them was not done at all.

Christopher Alexander built processes that facilitated faster feedback and provided the freedom to do the best thing to get that feedback while balancing it against a budget. Remember, this was the 1970s, long before DevOps⁴ turned up. Traditional builders had limited time and money for construction, and the same is true of any modern architectural project. In that respect, his process was no different. The difference was his commitment to producing something viable within that budget rather than pushing to have the budget increased. When the team needed to add something, they took something else away, even when this trade was thrust upon them[Battle12].

Christopher Alexander’s developments had the capacity for adjustment built in; nothing relied on everything else being just so before it was usable. However, this called for a different way of working; elements needed to be adaptable. This was why he sought out new materials. For him, it was essential to have the opportunity to make adjustments on site in response to last-minute revelations⁵. These revelations were the unpredictable issues brought to his attention by the process of construction. They arose not merely during construction but from the feedback of the act of constructing itself, and were things no-one could have anticipated before beginning the work.

Some agile development methodologies suggest a similar approach. Deploying working code to clients early to get feedback is similar to constructing within a budget so that the client gets something, even if everything else goes wrong. Working to an overall budget and realigning as the project progresses draws parallels with the principles of working with adaptable materials and building flexibility into the process. Prototypes and spikes⁶ are manifestations of the cardboard cut-out approach to getting on-site feedback. Simulations of architecture mirror the making of walking skeletons in code, as both of them prove that the overall structure works as expected.

Innovation

Christopher Alexander and his team were known for inventing new processes, realised just in time to meet their needs⁷. Unlike other architects, he was hands-on during his building projects, often finding new ways to achieve his goals while keeping costs low. The whole team worked like this, constantly taking into consideration all of the available information about the construction site. The availability of labour, tools, and materials guided them to discover new processes within easy reach, often undocumented or unique, but always appropriate.

Many examples exist throughout his printed works illustrating the way in which Alexander’s team developed solutions for specific contexts. In

Alexander paid attention to the environment right from his first project⁹. In 1961, while living in Gujarat, he developed a way to build a roof for a new school. Local resources were scarce. There was no wood to speak of and almost no access to transport for bringing materials in; however, pot-shaped guna tiles were readily available. His approach involved using stacks of these tiles, which made it possible to place arches in parallel lines and produce a dome. Supporting material is not free, and the self-supporting nature of the arches overcame that requirement. The outward thrust from the arches would have been a problem, but he found a solution in the nearby cotton fields; the dome was tied using the plentiful supply of tensile steel straps, which were originally intended for tying cotton bales. The whole process was suited to the materials that were available.

—Christopher Alexander, The Center for Environmental Structure,

The Nature of Order, Book 3 [NoO3-05], p. 527.

When I researched his history, I was shocked to learn that Christopher Alexander used all the available materials rather than just traditional ones. I had assumed he would have used more well-proven materials as they had easier-to-understand properties. On the contrary, he developed innovative ways of working with new materials as a regular part of his processes. This use of modern materials in novel ways meant that he could hardly be called a traditionalist.

He developed many new ways to work with concrete, challenging brutalist architecture’s dull and repetitive results[NoO3-05]. He worked with high-pressure water jets[NoO3-05], which were typically used to cut steel, to cut stone for experiments relating to decorative layout. And then there were the revelations about traditional materials, such as the way in which stout wooden beams, though costly up front, would be more environmentally friendly and cost-effective than the smaller stud forms simply due to the many hundreds of years for which the buildings might stand[NoO3-05].

Locally, but remote

Working at the site was a common requirement for Christopher Alexander, but one project10 proved he could even overcome that constraint when necessary. Although his team was not asked to complete the project, he still solved the problem of working remotely. The contract conditions included a requirement to lay all 8000 m² of flooring within a two-month window during the construction of a new concert hall in Athens.

Alexander needed access to a view of the floor and the actual materials, not merely an image of them, so his team rented out a warehouse sufficiently large to house sections of the final product. They cut tiles and placed them on fibreglass mats. When the design finally looked right, viewed as they would appear in their ultimate resting place, they glued them down. The completed mats were also cuttable, meaning they could adapt to any deviations and match unexpected errors in the borders when they arrived in Athens. The process proved that as long as you understand your goals (to view the work as close to reality as possible), a solution, along with a new tool or technique, should present itself.

Developers who stick to a plan and use existing tools, without inventing anything new to solve their problems, are probably not following an agile development process. A software developer should think about the product, how to build it, the tools they use, and how to improve those tools. Uninventive developers are lucky to be able to do a job that does not present novel requirements. But then again, perhaps they’re not so lucky after all.

Examples can be found in [NoO3-05] where they use finite element analysis for wooden structures, and [NoO4-04] for a concrete bridge structure. Other examples exist such as the reinforced concrete trusses for the Julian Street Inn.

Gunite, or sprayed concrete, is a quick-drying form of concrete shot under pressure at a target surface. It’s normally used to coat the surface of cavities or reinforce an otherwise looser form to give it rigidity. Examples include the hull of swimming pools or creating a skin for a cliff edge to reduce erosion.

There is an extensive breakdown of how they developed a budget system in [TOE75]. The aspect of budget is also covered in detail for the West Dean project in [NoO3-05] from page 238 and onward, and also the Eishin Campus project in [Battle12] where there is evidence of how the established methods naturally tend to budget extension and waste (e.g. the concrete lake slab on pg 357).

DevOps is a relatively young branch of a manufacturing and product development process of ongoing improvement and introspection, but tuned for software development.

See [TPoH85] for examples of new roof and brick design to work well with what was available and the unique requirements of the project. Many other examples can be found in [NoO3-05].

A spike is what older people like me call a proof-of-concept.

The development of the school roof is documented in

The blocks he refers to are a brand called Hebel. They provide the blocks and the necessary tools to work with the novel material. They can be cut with wood tools and readily adapt to any problem.

The project is documented in

Moving away from documentation and requirements gathering

No plan survives first contact with the enemy.

— Helmuth von Moltke the Elder (paraphrased)

It could have been the motto

of the

The

The manifesto was also making a statement by asserting that the documents typically produced during development had no inherent value. Only documentation for an extant product was valuable to the end user.

A further problem with documents typical of the time was denial of the mastery, purpose, and autonomy of the programmer. Plans were orders—something to follow. The only choice was whether to do as instructed or remove yourself from the project. This echoed Alexander’s thoughts on master plans for site development.

[T]he existence of a master plan alienates the users … After all, the very existence of a master plan means, by definition, that the members of the community can have little impact on the future shape of their community, because most of the important decisions have already been made. In a sense, under a master plan people are living with a frozen future, able to affect only relatively trivial details. When people lose the sense of responsibility for the environment they live in, and realise that they are merely cogs in someone else’s machine, how can they feel any sense of identification with the community, or any sense of purpose there?

— Christopher Alexander,

The Oregon Experiment [TOE75], p. 23-24.

However, as is often the case with an immature collective, things went too far. Documentation is for more than just the end user. It provides fertile ground for insights and elicits unexpected requirements. It offers a way to document how you arrived at your decisions and what informed them. We must also recognise that some specific forms of documentation are mandatory. Indeed, some paperwork is used to verify that we have achieved our expected outcomes and reached an arbitrary payment gate, while other documents may outline contractual obligations to security or safety.

People overlook the powerful effect of writing on understanding a problem. Writing it out often helps you to notice gaps in your knowledge or reveals contradictory beliefs. I studied design patterns to write this book, but the writing itself has also been an educational process.

These days, end-user documentation—the only documentation implicitly allowed—is considered an indicator of poor design as the UX design should make the application learnable without it. User manuals embody marginal value to the developer; a library should be well commented and easy to grasp, avoiding the need to refer to separate documentation.

The

So, why plan at all? Well, because planning is faster than simply doing. Planning what you’ll cook for dinner for a week can simplify the shopping and the cooking. Sure, things can change, but at least you have an overall idea of what you have to work with. When you have an idea of what to work with, you can balance the overall effort and cost of the operation. And this is what Christopher Alexander did. His process included a lot of up-front planning. They budgeted for parts and the selected patterns to use in the overall construction. His work in

A preference for working software over comprehensive documentation has been interpreted as advocating for the removal of all requirements-gathering steps. However, this leads to software development without an initial phase to gather tasks, to figure out the complications, and to reduce risks by ensuring bases are covered. Does up-front requirements gathering decrease risk in practice, though?

Preparation versus risk relates to the theory of quantity over quality: how practice, deliberate or otherwise, makes you better at an activity. It allows for mastery and gives you new perspectives for making better decisions. It also explains how evolution wins every game ever played. Producing an order of magnitude more software products to show to the customer to get feedback, rather than spending days, weeks, or months studying the customer’s requirements, sounds very similar to the tale of clay pots found in the book

The aforementioned tale goes like this: the teacher announced the class would be split into two groups. The quantity group would be graded solely on the weight of their work. The quality group, however, would be graded in the traditional way, on the quality of a single pot. The experiment had the most curious result. The highest quality works were all produced by the quantity group. The moral of the tale is thus: to deliver the best possible output, practical experience and many iterations trump time spent in preparation and deep study.

I would like now to relay a personal story in keeping with this tale. In college, I studied Music Technology, a course that explored the technological foundations of music and other media in the modern age. It included many aspects of music, from royalties and copyright law to physically constructing a studio. Other workshops were more musical, and among them was a series of units on the composition and production of musical tracks. Music production was the reason I had taken the course in the first place and was the joining together of many disciplines. I wanted to make music using better tools and to learn better composition techniques and songwriting skills.

My music-making capabilities gradually improved over the time I spent on these units. I took each track one at a time, possibly releasing a single worthwhile piece of music each term. That’s three months per piece. I saw how I was improving with each new track and was glad I was progressing. However, we had to decide what our final project for the course would be. I am a game developer at heart and have always wanted to make them, so I committed to a project consisting of writing all the music for a fictional computer game.

This project was a tremendous change of pace for me as, without fully realising it, I had signed up to produce more tracks for this single project than I had produced in the whole course up to that point. My portfolio of songs was somewhere in the region of ten to thirteen at the time; I cannot remember precisely. Nevertheless, I decided to produce a track for each level of the fictional game, with themed music for each act and a general motif for the whole suite. I also followed some assumed guidelines for game music, such as no chorus or refrains, just a steady mood, and reduced dynamics meaning a player could set a volume level and never have to listen to silence for very long or worry about the music being louder than the sound effects they were listening out for.

With these limitations in place and the sudden need for more than twenty tracks, which all had to be composed before the project’s due date, I started work. As I worked, I decided which instruments were core to the pieces and which were track-specific. I built up a set of practices for constructing tracks and learned how the filters and effects I used would sound even before I applied them. Overall, the time it took to create each song grew shorter as the project advanced to completion. When it came to the final piece, it took me no more than an hour from first note to final mix.

This is the part where my tale reflects the story about the clay pots. Whereas in the ceramics workshop, the teacher graded the students by weight, I was due to be graded on production quality and my understanding and implementation of the techniques we had been taught in class. I admit that I had been a very poor composer when I started the course; over time, I had improved to the point where I was merely third-rate. However, after the game-music project, the teacher who graded me said the last few tracks were the best he had heard me create. In effect, the more pieces I had completed and the more in the zone I had become, the better the individual compositions were.

More consequential for me was how, after the project, my new production quality stuck. Today, I am a wretched composer due to a lack of practice, but the sudden improvement at the time meant any music I produced after the project was elevated to a new level. For this reason, I would argue that the story of clay pots is incorrect, to some extent. Some people interpret the result of the experiment as being related to agile methodologies, such as Scrum, when it is not.

A Scrum-driven project does not aim to build many individual products, producing one great product in passing, almost by accident. It’s still a process aiming to produce one final viable product. It’s an iterative development process. Therefore, it sits in the camp of the primary group—the group graded on the quality of a single pot. Ultimately, my evaluation was based on the quality of my final suite of music, not on how many tracks I had produced. Therefore, what we should take away from the clay-pots tale should be that it’s not the pot that gets better, but the potter.

This is an important distinction because you only get one chance to make the final product in some projects. Perhaps it’s difficult or impossible to build a larger final product from a smaller one. Iterative development might not be an option. In such situations, if you don’t look forward towards the final product and what it should be, it can lead to nothing at all. When engaging in these projects, there are often insurmountable problems that are created in ignorance during early development. For instance, consider the cost and complexity of fixing security issues after your software’s first alpha or beta release. So what the clay-pots experiment tells us is that we can be better during these projects if we understand that practice and preparation are two things which can sometimes be one thing.

In conclusion, agile development can be forward-thinking and include up-front investments, just as Christopher Alexander’s team selected design patterns to structure their projects. It can be about learning deeply enough to remove the need to look forward. Agile principles will prioritise faster development to facilitate swifter learning as well as building up good tools to make future work more manageable, just as Alexander developed new tools and materials to complete his constructions. You shouldn’t expect to fashion a great composition on your first attempt if you don’t study, but if you intersperse your studies with a hundred creative acts, your last one will be better than if you had spent the whole time with your nose in a book.

Agile approaches are suitable for training your team to get things right the first time every time you task them with a familiar project. However, this form of development can be wasteful when attempting exotic projects. This is why we need to use models and prototypes, as there will be major mistakes and lots of technical debt. Christopher Alexander strove to use flexible materials to avoid the costs of these unknowns—materials where errors could be undone or avoided, even as they emerged.

Every process depends on the wisdom of the team to instinctively know the right thing to do. Agile methods allow them to do that in the same way as the distribution of knowledge via a pattern language. It also allows them to become better at their craft through accelerated experience. If what you are building can be built up iteratively, then all the better, and in software, it usually can. Do not fear throwing away the bad work and early attempts. In fact, you should be fearful of not throwing code away.

Rebuilding whole modules from scratch will become quicker as the team grows better at making them through practice. What you cannot do safely is rewrite a module you didn’t write yourself. Also, you should rewrite early, not late, as your wisdom will have already begun to fade.

This kind of evidence might not be enough for you. You want to know why. Why is planning up-front not as effective?

Much like a gemba walk is about literally walking in the place where the work happens, a review of a plan must touch the reality of the problem space.

The right wrong thing

User stories should tell you the right thing to make, while requirements analysis should tell you how to make the thing right. However, the latter process gets some things right and other things wrong. It can reveal literal and obvious things, such as what platform you need your software to run on, how much memory it has, what can be considered an acceptable response time, or how many concurrent users you expect to reach. These numbers can, of course, be wrong. An agile development practice almost expects such answers to change, even if they are correct at the time, yet these facts are knowable at the time. They can be detected, measured, and calculated, even if they are wrong at the end of the project.

Other things are entirely unknowable such as unexpected market shifts or secret projects that are suddenly and publicly announced. No requirements analysis can gather the unforeseeable. Agile methods can prepare you to handle these, but they can’t predict them any more accurately than traditional requirements-gathering exercises. And if they don’t happen, why do we still think that agile approaches are superior?

What’s potentially knowable but often missed are the requirements we unearth when project components come online; the results of interactions between parts previously developed in isolation. These are the emergent properties and complexities. A requirements-gathering phase can occasionally pick up on a few of these if seasoned developers are present, but even if you have experienced experts onboard, you should not hope to discover more than a slim majority of them during any planning phase. For this reason, projects should have a pre-production phase. A more fruitful requirements-gathering process can occur during a prototyping or proof-of-concept development stage.

Turning to the making phase faster has two benefits. First, it creates people with wisdom. In the future, during preliminary requirements gathering on a different project, they will know what requirements there will be for the things they typically work on.

Second, this change allows you to rapidly reach the stage in development where the requirements you missed during requirements-gathering make themselves known. They appear as integration blockers or arise when completing detailed design work, occasionally manifesting as insurmountable bugs at the lower levels.

Code enforces its requirements better than documentation because conflicts in documentation need to be actively sought out. In code, conflicts rapidly become compilation or implementation impediments. There’s nothing quite as immediate and obvious as being in the middle of developing a feature and realising it’s not possible to implement it with the current API or data layout.
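As a minimal sketch of that immediacy, consider two requirements that could sit in separate documents for months without anyone noticing they disagree. Everything here is hypothetical and invented for illustration: the eight-character limit, the sample names, and the `Record` and `find_conflicts` helpers. Once both requirements are written down as code, the disagreement shows up the first time they meet.

```python
from dataclasses import dataclass

# Hypothetical requirement A (data layout): stored names fit in 8 characters.
MAX_NAME_LENGTH = 8

# Hypothetical requirement B (feature): accept the names users actually sign up with.
SIGNUP_EXAMPLES = ["Ada", "Grace", "Christopher"]


@dataclass(frozen=True)
class Record:
    name: str

    def __post_init__(self) -> None:
        # Requirement A is enforced at the point where the data is created.
        if len(self.name) > MAX_NAME_LENGTH:
            raise ValueError(
                f"name {self.name!r} exceeds {MAX_NAME_LENGTH} characters"
            )


def find_conflicts(names: list[str]) -> list[str]:
    """Return the names requirement B needs but requirement A rejects."""
    conflicts = []
    for name in names:
        try:
            Record(name)
        except ValueError:
            conflicts.append(name)
    return conflicts


print(find_conflicts(SIGNUP_EXAMPLES))  # ['Christopher']
```

In a document, nobody is forced to compare the field width with the signup examples. In code, the first attempt to build the feature on top of the layout raises an error that has to be dealt with before work can continue.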

Christopher Alexander’s process mirrors both these benefits. Planning at the site resembles eliciting requirements by working on prototypes, while continuous integration parallels the production and reviewing of building mock-ups. The builders using Alexander’s processes were given fast feedback on what didn’t work, and became better architects at a faster rate because of it. Again, this leads to wiser builders and the swifter discovery of and recovery from emergent problems.

This process scales with experience. As the critical elements of the project increase in size, the depth of knowledge and the breadth of expertise needed by the development team grow in proportion. For large projects, the developers need to be experienced at building at least medium-sized projects. The larger the project, the more the work must be repetitive for the whole project to be completed successfully. This repetition is not like a factory line but more akin to learning how to mortice a door or frame a window; repetition with variation leads to mastery. Working as part of a team and adapting what you have learned to support the project provides purpose. Meanwhile, understanding and improving the process at the site as a trusted team member gives a sense of autonomy.

Learning is essential, and this is why teams need to stick together. It’s also why we need teams in the first place. No one can do all jobs well. Some members have to carry the knowledge for specialist areas to save time for the group. If everyone has to learn every part of a production chain, then no one knows anything intimately enough to make insightful improvements.

No process is magic; not even Christopher Alexander could invent a system by which a complete novice could build a town from nothing. But he did produce a process by which a beginner could decide to forge a village, and from that process would emerge a community of buildings and an architect.

Furthermore, writing code also has other benefits. Counterintuitively, code is simpler to change than a specification. It’s not easier to alter, but it is more straightforward to be sure your work is done. Code takes time to perfect, incorporating many keystrokes and moments of grumbling over failed compilations and red test runs. But even though it takes longer to get right, it is simpler to change because when every test is green, you know you have finished. It gives you immediate feedback on repercussions and adds value as part of the change. It’s also in revision control, so there’s a record of the change. This helps decode whether any newly introduced usage patterns were part of a vital feature or an unexpected behaviour added by accident.

Many of these aspects are not mirrored in the physical building site, but moving mock-ups around lets you see the impact of alternatives quickly and supplies immediate feedback on the repercussions. Feedback comes much faster than when drawing with a pencil on paper. Unfortunately, these physical processes of making changes do not commonly produce historical records, so decisions and reasoning of physical construction can easily be mislaid.

Problems with acting early

The problems with the action-first approach are mirrored in physical development too. When requirements change on a grand scale, such as building regulations for physical construction or hardware availability for software construction, much of the completed work is wasted and more must be done to move the project back to a new starting point. The source of requirements can vanish, such as when a construction no longer needs parking or a piece of software no longer needs a feature because the operating system now takes care of that aspect. Of course, there are also new requirements, conspicuous in hindsight, leading to regrets that there should have been a little more forethought. Then there are the horrible kinds of change whereby the requirements were gathered, but misinterpreted. The literal requirements remain unchanged, but the solution must accommodate the new interpretation.

An up-front analysis could have revealed some of these potential sources of outside interference, but performing it later leaves these risks unexplored until you can better discern their importance. Consequently, neglecting any up-front requirements analysis is a foolish way to develop anything, yet doing everything at the start is equally daft. The point must always be to reconsider your motivations. What is the state of the world, and what do you currently know about the problem? Each step is a place to stop and assess where you are now and where you need to go next.

Sequences, sprints, and smaller steps

Processes inspired by Agile principles are better than the methods we were using before, but only in the same sense that walking through puddles is better when wearing waterproof boots. The question remains: why are we walking through puddles at all?

Before Agile principles, the favoured production practice was to have big plans and continually refine them until they were ready for use in product development. Lots of documentation would be in constant flux, and no one could read it fast enough to stay up to date. Some chose to ignore the rules and work how they wished. They developed first and documented second, if at all.

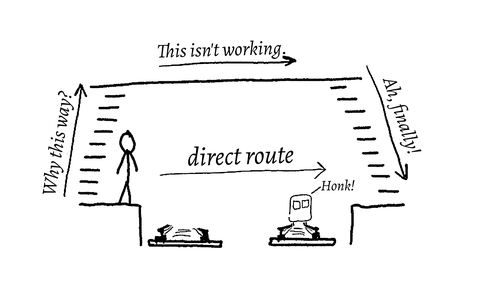

Agile development arrived as a solution emphasising direction over destination. We needed this because we see better by noting connections than by seeking out the endpoint. You often know the right direction before you know precisely where you’re going, just as you seek the door and not the room. Moreover, you predict the best course of action before seeing the result, and you should know the need before the implementation.

Christopher Alexander’s building processes included this direction-over-destination principle. His developments were carried out by applying local, stage-appropriate changes. His team regularly held reviews with the client before committing to anything they would pay for. These reviews included everything from the overall design to the colour of the details on the tiles. The significant part here is not the customer or the feedback but the regularity of the review. Rather than following instructions, they were building according to a plan by adjusting its details in response to the data resulting from the ongoing process.

In Scrum, the iteration cycle invites people to set time limits or reduce the scope of their tasks. This ensures that everyone takes the time to look up and see where they are. Scrum requires the team to meet with those who can give feedback on their direction. Then, they can appraise the situation and decide if they need to course correct. They can also inspect long-running tasks and check whether they need a change of plan. Do they need to change? Should they stop? Or can more resources be assigned so that they can be completed quicker? In effect, at any review meeting, the team receives feedback on the observable status of the project and can decide how to prioritise the remaining work, whether this leads to a change or carrying on as before. Once these decisions have been made, they decide on the next meetup date.

As children, many of us played a game of find-the-object, in which we were told whether we were getting hotter or colder. The game was simple. If the hider saw us getting closer to the target, they would say the word ‘hotter’, and if we moved further away, they would call out ‘colder’. This is feedback. Satisfying the customer through regular delivery of working software is the first principle of the

The iteration cycles of Scrum usually assume multiple days between meetups, which can create a disconnect between the stakeholder and the team working on the project. Scrum attempts to mitigate this problem by introducing the role of product owner. The product owner stands in for the stakeholders and is available to answer questions at any given time. It’s not the same as having direct access to the stakeholders, but it has other benefits. Stakeholders can avoid dealing with the poor communication skills of the development team. Having a charismatic product owner is not deceitful. In fact, it only becomes a problem when they don’t understand the stakeholder’s values.

You must act on feedback. You have to change what you do and how you do it. The order in which you make decisions is also critical, which is reminiscent of Christopher Alexander’s generative sequences1. Generative sequences is the name given to sequences of steps or refinements that produce healthy structures. A healthy structure is well-formed according to the stresses, strains, and forces surrounding it. Feedback must be timely, and a good sequence provides helpful feedback at the right time so that it can be acted upon efficiently. Consider how some building block construction toys, such as Lego™, have excellent instructions while others seem inferior. The main difference is not the printing quality but the concern with which the instructions treat the mental model of the builder. The builder must have guidance for the right thing at the right time, and each step has a context. Good design patterns and good instructions are generative sequences. Moreover, good sequences generate a form while not demanding stilted, robot-like following.

So, things created through a process of sequential decision-making steps are of a certain quality, not only due to the steps chosen but also the order in which they are taken. One sequence will have a better outcome than another sequence of the same actions. As a code-related example, consider what might happen if you build all the features first and then fix all the bugs at the end.

Some orderings will only be able to produce a disappointing and inflexible final design. An example would be developing software without considering security or performance and then trying to introduce those aspects afterwards. Other arrangements of the steps will require minimal back-tracking during the final stages.
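The order-dependence described above can be seen in miniature with two tiny text-cleaning steps. This is only an illustrative sketch under assumed names: `trim`, `cap`, `run`, and the eight-character field are hypothetical and stand in for any pair of steps whose effects interact.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def trim(text: str) -> str:
    """Remove surrounding whitespace."""
    return text.strip()


def cap(text: str) -> str:
    """Cut the text down to fit a hypothetical 8-character field."""
    return text[:8]


def run(steps: list[Step], text: str) -> str:
    """Apply each step in order, feeding the result into the next step."""
    return reduce(lambda acc, step: step(acc), steps, text)


RAW = "   patterns   "

print(run([trim, cap], RAW))  # patterns
print(run([cap, trim], RAW))  # patte
```

Both runs perform the same two actions, yet only the first keeps the whole word. The second spends part of the field on whitespace that is discarded a step later, and no amount of care in the final step can recover what was cut.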

If the order of steps affects the final design, all agile approaches help because very few lock you into a specific order of activities. None of the elements of the

I first came across them in

Solving the customer’s problems

A strange development occurred in architecture, whereby the involvement of the client diminished as the cost grew. You might think that someone who spends a lot of money on a project would expect to have a great deal of sway on the decisions made, but they often tend to lean towards the opposite outcome. As the cost increases, the time the architect spends consulting with the client diminishes. This reduction of influence is now expected, to the extent that Christopher Alexander was told by his clients[NoO3-05] that they were thrilled to be as involved as they were in developing their own homes. They expected his team to present them with some beautiful final form rather than taking considerable time to gauge their constraints and elicit from them all their deepest hopes and desires for their investment.

In some ways, agile development attempts to parallel this building process. There is a preference for taking a route where you keep the client in the loop, and returning to them with prototypes and mock-ups to get feedback aligns with Agile principles. However, all of the agile methodologies ought to incorporate the practical, concrete practices of Christopher Alexander when extracting those elusive, profoundly human needs. I’m not talking about the problems caused by emergent properties—those are the constraints of the built product. No, I am referring to the gap between what the customer is willing and able to ask for and what they actually want and need1. Even knowing the gap exists would be an improvement.

In the realm of architecture and building, the patterns found were all of a sort that produced environments for the end user. They cared less for the builder and very little for the ego of the owner of the completed buildings. If we consider a building contractor, a developer who funds and then rents out the building, and a potential family who will live in it for a generation or two, we can easily see the differences between them. In effect, the patterns were all about how the building was to be used in line with the actual lived experiences of the family members, not so much the building process. The affordances to the contractor who built the house tended to be those that were also good for future maintenance or for when the family wished to extend their home. In almost no instances were the patterns supportive of landlords or land developers.

As I studied design patterns, I saw an emergent property of these patterns for physical buildings, not just in relation to homes, but with regard to workshops and malls, hospitals and schools too. The patterns were almost always related to two or more of the following states in which people living in and around the building would spend most of their time.

- Rest and relaxation or recovery.

- Sustenance and personal maintenance.

- Commuting and transportation.

- Communication and community.

- Labour and the care of others.

- Personal opportunity and spirituality.

- Family growth and adaptation.

These aspects of life required a different approach to building than an off-the-shelf product or modular building technique. They necessitated empathy for the specific needs of the end user. The builder needed to keep in mind the life to be lived by the inhabitants of the space once the job was complete. They needed to probe beyond surface or fleeting preferences and opinions and provide a structured but inviting approach to elicit the deepest, most enduring, and often quite trivial-sounding but fundamental needs.

Equally, an agile software developer needs to have empathy for the client. They must have a clear image of the final goal and should concentrate on how it provides for the user rather than the image the user thought of when they first commissioned the project. The user is an expert in their domain, but they are not an architect or a builder. They do not know how to ask for what they want when surrounded by examples of those requests ordinarily being considered irrelevant, insubstantial, or simply not serious.

In building and software development, the customer or client is, or at least should be, at the centre of the project. Their problems are the only essential problems to be solved. Their needs are the only needs that can be fulfilled and provide value.

Given these principles, the developer’s profit is contingent on correctly pricing effort. Profit can be increased by cutting costs or inflating the client’s investment, yet those actions would subvert the client’s needs or prove the process was not already efficient.

Gaining a solid reputation, and taking that to the bank as a constant flow of work, was more important when people grew their homes and extended their workplaces. Building was a sustainable practice, not a growth industry. Construction jobs were shorter, and people could gauge your work and hire you again. This is no longer true of physical builders, as evidenced by the many attempts to certify trades-folk so that reputation can once again have an impact.

Unfortunately, software development started out in this position. Reputation was nonexistent for software development houses; there was no history to rely on. Those who commissioned software development usually hired in developers as there was such a small selection of large-scale project development organisations.

Nevertheless, we are now heading towards a world where reputation matters because scarcity is no longer a problem for a customer of small apps. The number of software houses that create small to medium-sized applications has grown tremendously in the last 30 years. In this new world where customers will say no, you must be recognised as someone who will solve their real problems and not introduce more as part of your process. We’re not there yet, but the frequency and size of projects developed on a for-hire basis are growing; at the same time, the number of reviews about developers is also rising.

Solving the client’s real problems requires an understanding of how to develop software and how to elicit the client’s deepest requirements. Only when you can find out what the client needs, despite their inability to ask, will you truly develop software that fulfils a need. Only when you have created a car for a client who wanted a faster horse will you know you have developed the capacity to satisfy those hidden requirements.

Some will now think of the quote, commonly misattributed to Henry Ford, about not asking his customers what they wanted as they would have only asked for a faster horse.

Slowly revealing the solution to a complex problem

Solving the puzzle of how to make something is one challenge, but knowing what to make is another one altogether. A customer could be unaware of what they need or be unable to explain it. The form of what you need to build might not even exist. In these cases, your development process could benefit from mimicking Christopher Alexander’s methods.

Many complex products are like this. Neither the user nor the customer will know what they want, but they will know what they don’t like. This kind of negative-only feedback is effective, yet it can create expensive problems for those who are unprepared to work this way. Alexander always worked with a customer; if they were absent, he resorted to keeping one in mind, much as developers use personas to guide their work. Instead of guessing what they wanted, his processes were more keenly tuned to provide the things that would give them a more wholesome life. His team would take a deeper look at the patterns of the lives of those who were due to inhabit the buildings and sites once the development had ended. It’s these patterns of life that he observed most closely[Notes64]. The actions taken, the reasons why, and the values behind them all played a part in these analyses. They allowed for plans which not only satisfied the customers but also delighted them[NoO3-05].

Complexity is a word with a loose meaning in common parlance; however, there is a specific meaning of the term that Christopher Alexander managed to tame. It is therefore worth explaining what the word should mean to anyone interested in his solution. We may have some idea of the term when we consider complex projects; for example, they might include formidable problems or things made of many parts. However, I want to more concretely define the term and bind it to one particular way of thinking before using the word as much as I will in later chapters.

I encountered a better way to judge complexity when watching a talk by Rich Hickey entitled ‘Simple Made Easy’1. Everyone can benefit from watching the lecture, as the content is broadly applicable, even if you don’t know anything about Clojure. (I certainly don’t.)

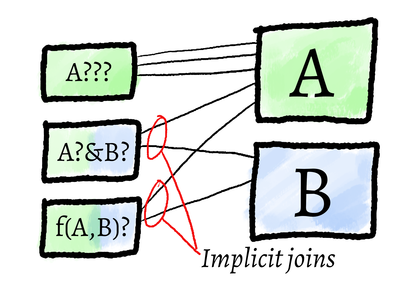

Complexity and simplicity are not concerned with how difficult or large a system is but with how independent the inputs are from each other. A system with a thousand inputs and a thousand outputs, all wired one-to-one, is simple. However, a system with only two or three inputs, with every output depending on every input, becomes complex. One example is a car with a throttle and a clutch, in which the driving force is a product of both the engine speed and the clutch engagement, held together by a dynamic balancing act. There are only two inputs and one output, but anyone who has driven a clutched drivetrain vehicle can attest to the fact that it takes some practice to learn the complex interaction of those two inputs.

In software projects, the metric for complexity can be the number of things that need to change in response to any change you intend to make. You can measure at the code level and count the variables driving an output, particularly those coupled together like a throttle and clutch.
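To make this metric concrete, here is a minimal sketch in Python. It assumes you can write down, by hand or with tooling, which elements depend directly on which others. The `dependents` map and the module names in it are hypothetical and chosen only for illustration. The function collects everything that might need to change, directly or indirectly, when one element changes.

```python
from collections import deque


def change_impact(dependents, start):
    """Return every element that may need to change when `start` changes.

    `dependents` maps an element to the elements that depend on it directly.
    """
    affected = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for follower in dependents.get(node, ()):
            # Skip anything already counted, and never count the change itself.
            if follower != start and follower not in affected:
                affected.add(follower)
                queue.append(follower)
    return affected


# Hypothetical project: names are assumptions made for this example only.
dependents = {
    "config": ["parser", "logger"],
    "parser": ["report"],
    "logger": [],
    "report": [],
}

print(len(change_impact(dependents, "config")))  # size of the ripple from "config"
print(len(change_impact(dependents, "report")))  # size of the ripple from "report"
```

A large number for a small change is a hint that the element sits in a complex part of the system. A small number suggests the change is contained.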

Complexity can arise from technological choices. Lazy evaluation can have complex performance implications, and the use of smart pointers can entangle object lifetimes. Concurrency primitives for locking shared resources can deadlock at the most unexpected of times.

All of these have something in common—the elements alone are not a problem, in and of themselves, but challenging situations emerge from their relationships.

Now that we have defined complexity, we need to specify how we will use the word ‘complicated’. Something with many parts can be complicated. A complicated sequence or set of related things must be connected in a specific order. The elements or steps only interact insomuch as they depend on each other in a strict ordering in time or space. When you change one part of a complicated system, you have a small surface area for change propagation. There is a linear chain of effects. The effect of a change does not ripple back around to the element instigating the change, and the changes in the ripple are simple but frequently tedious (see protocols, build sequences, taxes and expenses). These are all complicated processes, but they are often predictable systems. They are knowable. It’s possible to accurately estimate the total impact of your input before you have seen the output.
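The difference between complicated and complex can be sketched as a check for feedback. This is a minimal illustration, not a formal definition. The `effects` maps and their element names are hypothetical, and the function simply asks whether a change can ripple back around to the element that instigated it.

```python
def ripples_back(effects, start):
    """Return True if a change to `start` can propagate back to `start`.

    `effects` maps an element to the elements it directly affects.
    """
    seen = set()
    stack = list(effects.get(start, ()))
    while stack:
        node = stack.pop()
        if node == start:
            return True  # the change has come back around to its origin
        if node not in seen:
            seen.add(node)
            stack.extend(effects.get(node, ()))
    return False


# Complicated: a strict, linear build sequence (hypothetical step names).
build_sequence = {
    "compile": ["link"],
    "link": ["package"],
    "package": ["deploy"],
}

# Complex: a toy feedback loop in the spirit of the throttle and clutch
# (an assumption for illustration, not a model of a real drivetrain).
driving = {
    "throttle": ["engine_speed"],
    "engine_speed": ["clutch_bite"],
    "clutch_bite": ["throttle"],
}

print(ripples_back(build_sequence, "compile"))
print(ripples_back(driving, "throttle"))
```

In the build sequence, you can follow the chain once and know the total impact. In the feedback loop, every adjustment invites another, which is why such systems resist estimation.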

Given this definition of complexity, we can now pose some questions. First, how does one tame complexity in a project? Second, what did Christopher Alexander do to rein in the complexity inherent in a building project? Finally, do these measures align with software development and Agile principles?

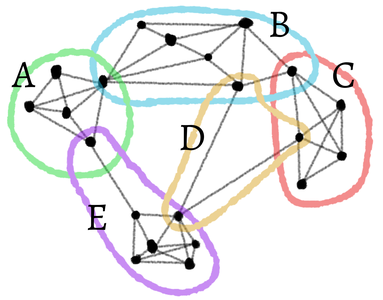

Regarding the first question, is it even possible to tame complexity? The answer is yes to some extent, and one way is documented in

This certainly does not sound like the agile development practices we know. It also does not appear to address complexity. But let’s review for a moment. Christopher Alexander tamed complexity by understanding what it was. It was the coupling of changes. So, rather than creating a hierarchical architectural plan where each part finds a place in a group with others based on our prejudices, he grouped them by the strength of their change coupling alone. He would continually ask questions like, “If I change this part of the design, will that other part have to change too? And if so, does it improve with it, or does it make things worse for it?”

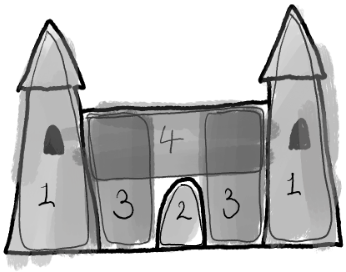

The

- Gathering all the elements that must be considered as part of the design.

- Revealing connections based on their complexity, that is, how they affect one another.

- Finding the weakest-linked large groups, splitting them into separate conceptual groups, and finding a way to name or make a diagram of them.

- Within each group, repeating the search for the weakest connections and splitting them again.

- Continuing the splitting process like this until all solutions for all subsets of elements seem trivial.
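The steps above can be sketched in code. What follows is a deliberately simplified illustration and not Alexander’s own procedure: it assumes each pair of coupled elements has been given a numeric strength, and it splits a group by discarding its weakest links until the group falls apart. The function names, the element names, and the strength scores are all hypothetical.

```python
def components(elements, links):
    """Group `elements` into connected sets using the (a, b) pairs in `links`."""
    neighbours = {e: set() for e in elements}
    for a, b in links:
        neighbours[a].add(b)
        neighbours[b].add(a)
    seen, groups = set(), []
    for element in sorted(elements):
        if element in seen:
            continue
        stack, group = [element], set()
        while stack:
            node = stack.pop()
            if node not in group:
                group.add(node)
                stack.extend(neighbours[node] - group)
        seen |= group
        groups.append(group)
    return groups


def decompose(elements, links, trivial_size=2):
    """Recursively split `elements` along their weakest links.

    `links` maps an (a, b) pair to a coupling strength. Groups no larger
    than `trivial_size` are treated as trivially solvable and returned as is.
    """
    elements = set(elements)
    if len(elements) <= trivial_size:
        return sorted(elements)
    # Only links wholly inside this group matter at this level.
    inside = {(a, b): w for (a, b), w in links.items()
              if a in elements and b in elements}
    remaining = dict(inside)
    groups = components(elements, remaining)
    # Discard the weakest remaining link until the group separates.
    while len(groups) == 1 and remaining:
        weakest = min(remaining, key=remaining.get)
        del remaining[weakest]
        groups = components(elements, remaining)
    if len(groups) == 1:
        return sorted(elements)
    return [decompose(group, inside, trivial_size) for group in groups]


# Hypothetical design elements and coupling strengths.
links = {
    ("window", "light"): 9,
    ("lintel", "bricks"): 8,
    ("window", "lintel"): 2,
}
print(decompose(["window", "light", "lintel", "bricks"], links))
```

With these made-up scores, the weak link between the window and the lintel is cut first, leaving the window with its light and the lintel with its bricks. The hard part in practice is not the splitting but judging the strengths honestly.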

The above process was recursive. Large cutting lines were formulated first, and then those groups were cut up in the same fashion. In this way, his early work revealed connected pieces of our environment. As he worked on more projects, he found some connections occurring repeatedly. Consistently naming these recurring situations led to the discovery of patterns. With patterns, he could resolve parts of a complex system without the burden of the concrete problem biasing the solution. He could now avoid the XY problem, being wise to the deeper need hidden beneath a shallow problem definition.

Many recurring collections of coupled elements tended to distil down to only a few happy, balanced solutions. However, these patterns were not actually solutions; they were the common properties of the organisations of elements solving those problems. The patterns were the pairing of the problems to be solved and what would be true for any good quality solution.

In a complex project, if you reduce the number of connections between elements or make the relationships visible, adjustments and estimates become easier to predict and contain. Changes in a project based on preconceived conceptual groupings cause cascading effects and induce further stresses throughout the system.

Adjusting the style of a window frame can affect whether a window can provide enough light to make it worth installing in the first place. For example, with the advent of UPVC windows, given the requirement for approximately 10cm of border around the glazing of an openable window, it is hardly worth the effort to build window spaces less than 40cm wide.

Furthermore, a style change can affect the number of bricks needed or whether a wall needs a reinforced steel lintel. Physical limits frequently dictate whether a design is valid or worthwhile. The availability of materials should too, but so often you see examples of people shipping halfway across the world just to have precisely what they ordered. In a sense, this precision- and modularity-based approach could be considered environmentally unfriendly.

With code, we see similar patterns of complication. You might include a global or a

Using reality to reveal coupling

Christopher Alexander’s solution to discovering how elements interact was to be at the site. He used many mock-ups with bricks placed dry, cardboard constructs and painted paper strapped to wooden frames, as well as anything else his team could do to trial an idea before it had to be, quite literally, set in concrete. This is like prototyping for software developers. Proofs of concept take us from untested hopes to feasible options or moments of clarity and despair.

Christopher Alexander used mock-ups to discover what you can’t extrapolate from paper designs, including how objects inside and out obscure or bounce light. The choice of an angle and colour combination may affect your mood but go unnoticed on a paper plan. How a view looks through a window may cause people to linger there, and so we see that more passing space is required to navigate around those captivated by the vista.

We engage in a similar behaviour when doing exploratory programming, trying out ideas in the source rather than relying on paper designs or mental modelling. We make quick changes to prove that an idea might work in practice. Then we roll it all back and start properly. Using a spike like this shows us how the development will progress if we commit to it. We can identify how it might feel to build upon it later or even how it might be for the end user via a UX mock-up.

The most pertinent aspect is that the speculative activity should be done in the place itself, the actual site where the final work will eventually be committed. This means that you get to see how it impacts the final form, rather than just guessing at the impact. By contrast, when you work away from the main area and integrate at the last minute, you learn of the problems quite late. The value of this activity is that you are always building towards gaining hindsight; effectively, you are already wiser before you lay the first stone.

In

We often only see the shape of the solution as we draw very close to the end of a project. The form of the problem is similarly revealed quite late. This is not a coincidence. We would not need to iterate if we fully understood the problem we were trying to solve or all of the problems inherent in any solution. If we did, software development would simply be a matter of data entry.

Development as a puzzle

You can compare this to puzzles. When you first encounter a puzzle, you are given it in literal form. Whether it be a word puzzle, a wooden or metal toy, a puzzle cube or a sudoku, when you first encounter it, you only have a simple concept of what the outcome should resemble. This is much like your customer’s goal. You have yet to learn how to get there but can visualise the final state.

The more you poke around at the puzzle, the more you learn about it. You uncover the sub-problems and generate local solutions. You’re no longer solving the original problem; you’re solving the steps towards it and generating new words for states you see and actions you can take. This is very much like programming, which is why people often say you need to plan again once you know more. They mean that you need to replan once you understand more about the problem and its sub-problems and have metrics for the value of the sub-problems’ solutions.